Privacy-Preserving AI

Privacy Issues in the AI Industry

Privacy problems in AI and machine learning are multifaceted, spanning data collection, model training, inference, and deployment. The primary issues are:

Data Collection and Storage: AI systems often require large amounts of personal data, which can include sensitive information such as medical records, financial data, and personal communications. The collection, storage, and use of this data raise significant privacy concerns. For instance, data collected for one purpose may be repurposed for another, which can violate privacy expectations and regulations. Anonymization is a further concern during collection and storage: even when data is anonymized, it can be re-identified by matching it against other data sources to reveal individuals' identities.
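The re-identification risk can be illustrated with a small linkage sketch. All records, names, and the choice of quasi-identifiers (`zip`, `birth_year`, `sex`) below are hypothetical and chosen only for illustration; the point is that a record stripped of its name can still be tied to a person when its remaining attributes are unique in a second, public data set.

```python
from collections import defaultdict

# Hypothetical "anonymized" records: names removed, quasi-identifiers kept.
anonymized = [
    {"zip": "02138", "birth_year": 1965, "sex": "F", "diagnosis": "diabetes"},
    {"zip": "02139", "birth_year": 1980, "sex": "M", "diagnosis": "asthma"},
    {"zip": "02139", "birth_year": 1980, "sex": "M", "diagnosis": "hypertension"},
]

# Hypothetical public data set that still carries names.
public = [
    {"name": "Alice Example", "zip": "02138", "birth_year": 1965, "sex": "F"},
    {"name": "Bob Example", "zip": "02139", "birth_year": 1980, "sex": "M"},
    {"name": "Carol Example", "zip": "02140", "birth_year": 1990, "sex": "F"},
]

QUASI_IDENTIFIERS = ("zip", "birth_year", "sex")


def quasi_key(record):
    """Project a record onto its quasi-identifier attributes."""
    return tuple(record[q] for q in QUASI_IDENTIFIERS)


def reidentify(anonymized_records, public_records):
    """Link records whose quasi-identifier combination is unique on both sides."""
    anon_by_key = defaultdict(list)
    for record in anonymized_records:
        anon_by_key[quasi_key(record)].append(record)

    public_by_key = defaultdict(list)
    for person in public_records:
        public_by_key[quasi_key(person)].append(person)

    matches = {}
    for key, people in public_by_key.items():
        records = anon_by_key.get(key, [])
        # An unambiguous link needs exactly one candidate on each side.
        if len(people) == 1 and len(records) == 1:
            matches[people[0]["name"]] = records[0]["diagnosis"]
    return matches


leaked = reidentify(anonymized, public)
```

In this toy data only one person is re-identified. The two records that share the same quasi-identifiers stay ambiguous, which is the intuition behind generalizing attributes until every combination covers several individuals.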

Model Training and Deployment: During training, models can inadvertently memorize sensitive information from the training data and later leak it. This can happen through overfitting or improper handling of the data. Once a model is deployed, attackers can infer sensitive information about the training data simply by querying it. For example, membership inference attacks determine whether a specific data point was part of the training set.
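A minimal sketch of a confidence-threshold membership inference attack is shown below. The `MemorizingModel` class, its confidence formula, and the `0.95` threshold are assumptions made for illustration: the model is a toy nearest-neighbour classifier that stands in for an extreme case of overfitting, not a realistic target. It requires Python 3.8 or later for `math.dist`.

```python
import math


class MemorizingModel:
    """Toy 1-nearest-neighbour classifier that memorizes its training set."""

    def __init__(self, points, labels):
        self.points = list(points)
        self.labels = list(labels)

    def predict_with_confidence(self, x):
        """Return (label, confidence); confidence is 1.0 on an exact training point."""
        distances = [math.dist(x, p) for p in self.points]
        nearest = min(range(len(distances)), key=distances.__getitem__)
        return self.labels[nearest], 1.0 / (1.0 + distances[nearest])


def infer_membership(model, x, threshold=0.95):
    """Attacker's guess: unusually high confidence suggests x was in the training set."""
    _, confidence = model.predict_with_confidence(x)
    return confidence >= threshold


train_points = [(0.0, 0.0), (1.0, 1.0), (2.0, 0.5)]
train_labels = [0, 1, 1]
model = MemorizingModel(train_points, train_labels)

unseen_points = [(0.5, 0.5), (3.0, 3.0)]

member_guesses = [infer_membership(model, x) for x in train_points]
non_member_guesses = [infer_membership(model, x) for x in unseen_points]
```

The attacker only needs query access to the model's outputs. Against real models the confidence gap between members and non-members is smaller, so attacks of this kind are statistical rather than exact.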

Data Breaches and Security: AI systems are susceptible to data breaches and cyber-attacks, which can result in unauthorized access to sensitive data. Meanwhile, protecting the models themselves from theft or tampering is crucial, as compromised models can be used to leak or manipulate sensitive data.

Regulatory Compliance: Ensuring compliance with regulations like the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA) is complex. These regulations impose strict requirements on data privacy, user consent, and data usage. Managing data privacy across different jurisdictions with varying regulations can be challenging for global AI applications.

Solutions

Fully homomorphic encryption (FHE) enables secure, privacy-preserving data processing in AI and machine learning. FHE allows computation on encrypted data without decrypting it, so sensitive information remains hidden throughout the entire data processing pipeline. With FHE, AI and machine learning workflows can draw on shared data from multiple sources while safeguarding privacy, in line with the increasing public demand for privacy preservation. FHE addresses the critical need to train AI models on encrypted data sets without exposing the secret key. It closes the security gap that otherwise opens when data must be decrypted for processing, keeping data protected at rest, in transit, and during processing.

Furthermore, FHE supports privacy-preserving machine learning (PPML) training and the development of privacy-preserving AI models, which is highly relevant given increasingly stringent privacy regulations and the societal demand for privacy protection. Its potential in securing machine learning workflows lies in keeping data encrypted at every stage of processing, which fosters trust and confidence in AI and machine learning applications.

Privacy-Preserving Model Inference

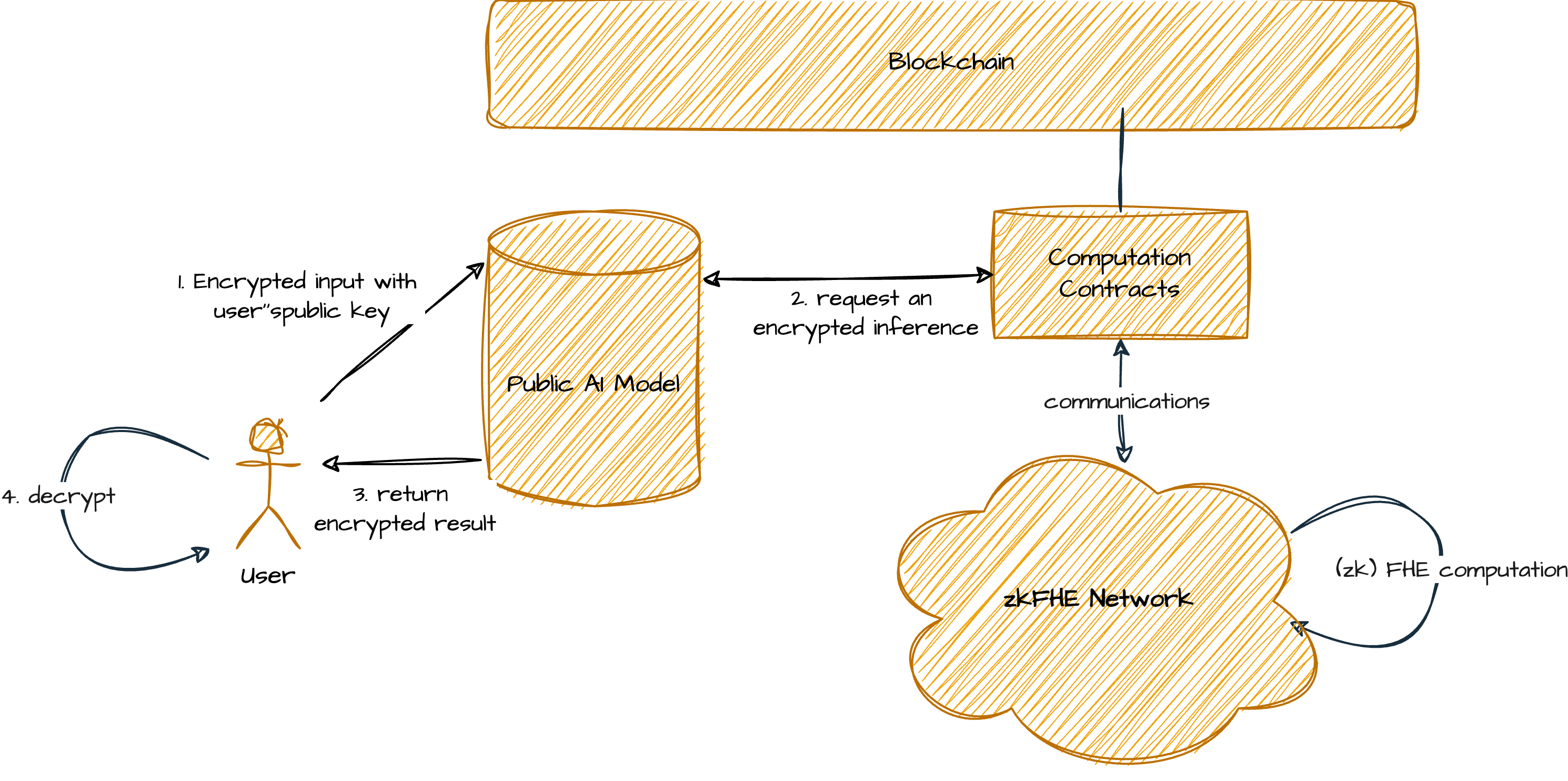

Fully Homomorphic Encryption (FHE) enables privacy-preserving model inference by allowing users to encrypt their private data before sending it to a public AI model hosted by a service provider. Here’s how it works:

Users encrypt their private data locally using FHE, ensuring it remains confidential during transmission. The encrypted data is then sent to the service provider with the AI model.

The service provider, equipped with FHE capabilities, computes on the encrypted data directly. This means the AI model can perform inference and generate encrypted results without accessing the plaintext data.

After computation, the service provider sends back the encrypted result to the user.

Using their private decryption key, the user decrypts the result to obtain the inference outcome. Throughout this process, the user’s original data remains encrypted, ensuring data privacy and compliance with regulations.
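The four steps above can be sketched end to end in a few lines. Note the substitution: this sketch uses a toy Paillier cryptosystem, which is only additively homomorphic, as a stand-in for FHE, and the "model" is a hypothetical linear scorer with integer weights. The primes are two small Mersenne primes chosen for readability and are far too small to be secure. All function names are illustrative. Requires Python 3.8 or later for `pow(x, -1, n)`.

```python
import math
import secrets

# Toy parameters: two known Mersenne primes. NOT secure, illustration only.
P, Q = 2**31 - 1, 2**61 - 1


def keygen(p=P, q=Q):
    """Return (public modulus n, secret key). Uses generator g = n + 1."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)
    mu = pow(lam, -1, n)  # modular inverse of lam; valid because g = n + 1
    return n, (lam, mu)


def encrypt(n, m):
    """Encrypt integer m (negative values are encoded modulo n)."""
    n2 = n * n
    r = secrets.randbelow(n - 1) + 1
    while math.gcd(r, n) != 1:
        r = secrets.randbelow(n - 1) + 1
    g_m = (1 + (m % n) * n) % n2  # (n + 1)^m mod n^2
    return g_m * pow(r, n, n2) % n2


def decrypt(n, secret_key, c):
    """Decrypt and map the result back to a signed integer."""
    lam, mu = secret_key
    m = (pow(c, lam, n * n) - 1) // n * mu % n
    return m - n if m > n // 2 else m


def encrypted_linear_score(n, weights, bias, enc_features):
    """Server side: compute Enc(w . x + b) using only the public modulus n."""
    n2 = n * n
    acc = encrypt(n, bias)
    for w, c in zip(weights, enc_features):
        # Enc(x)^w = Enc(w * x); multiplying ciphertexts adds the plaintexts.
        acc = acc * pow(c, w % n, n2) % n2
    return acc


# Step 1: the user encrypts private features locally.
n, secret_key = keygen()
features = [3, -2, 5]
enc_features = [encrypt(n, x) for x in features]

# Steps 2 and 3: the provider scores the ciphertexts and returns the result.
weights, bias = [2, 4, -1], 10  # hypothetical model parameters
enc_score = encrypted_linear_score(n, weights, bias, enc_features)

# Step 4: only the user, who holds the secret key, can read the score.
score = decrypt(n, secret_key, enc_score)
```

A real FHE scheme additionally supports multiplication between ciphertexts, which is what allows non-linear models to be evaluated; the message flow between user and provider stays the same.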

FHE for privacy-preserving model inference offers benefits like end-to-end data privacy and trust between users and service providers. However, challenges include computational demands and secure management of encryption keys. As encryption technologies evolve, FHE holds promise for enhancing data security and enabling safe deployment of AI in sensitive applications.

Federated Learning

Federated Learning (FL) is a distributed machine learning approach that allows for the training of a global model across multiple decentralized devices holding local data, without sharing the data itself. This technique preserves data privacy by keeping the data on local devices and only sharing model updates with a central server, which aggregates these updates to refine the global model. This process iteratively improves the global model through repeated cycles of local training and central aggregation, making it ideal for scenarios where data privacy and security are critical.
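The cycle of local training and central aggregation can be sketched with a one-parameter model. This is a minimal illustration of weighted federated averaging under assumed settings: the model `y = w * x`, the learning rate, the number of rounds, and the client data sets are all hypothetical. Only the locally trained weight leaves each client; the raw `(x, y)` pairs never do.

```python
def local_update(w, data, lr=0.05, epochs=5):
    """Client side: a few gradient-descent steps on mean squared error for y = w * x."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_averaging(client_datasets, rounds=20, w0=0.0):
    """Server side: average client weights, weighted by local data set size."""
    w = w0
    total = sum(len(data) for data in client_datasets)
    for _ in range(rounds):
        # Each client starts from the current global weight and trains locally.
        local_weights = [local_update(w, data) for data in client_datasets]
        # The server sees only the weights, never the underlying samples.
        w = sum(len(data) * lw for data, lw in zip(client_datasets, local_weights)) / total
    return w


# Hypothetical local data sets, all generated from y = 2 * x.
clients = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(0.5, 1.0), (1.5, 3.0), (3.0, 6.0)],
    [(2.5, 5.0)],
]

global_w = federated_averaging(clients)
```

Weighting by data set size means clients with more samples influence the global model more, which is one common design choice among several.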

FL is particularly useful in applications such as healthcare, finance, mobile and IoT services, and smart cities. It enables collaborative model training while adhering to data protection regulations and reducing the risk of data breaches. Despite these advantages, FL still faces privacy and security challenges, because the model updates sent to the central server can themselves leak information about the local data.

Combining FL with Fully Homomorphic Encryption (FHE) addresses this. In this approach, each participant encrypts its model update with FHE before transmitting it to the aggregator. Because FHE supports computation on encrypted data, the aggregator can combine the encrypted updates without ever decrypting them, so sensitive information remains confidential throughout the process.
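Encrypted aggregation can be sketched as follows. As a stand-in for FHE, this sketch uses a toy Paillier cryptosystem, which is additively homomorphic and therefore sufficient for summing updates. The primes are far too small to be secure, the fixed-point `SCALE` and the client updates are hypothetical, and the sketch assumes the decryption key is held by the participants and never by the aggregator. Requires Python 3.8 or later.

```python
import math
import secrets

# Toy Paillier parameters (two Mersenne primes). NOT secure, illustration only.
P, Q = 2**31 - 1, 2**61 - 1
N = P * Q
N2 = N * N
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # secret: held by participants
MU = pow(LAM, -1, N)                               # secret: held by participants
SCALE = 10**6  # fixed-point scale used to encode float updates as integers


def encrypt(value):
    """Client side: encode a float update as a fixed-point integer and encrypt it."""
    m = round(value * SCALE) % N
    r = secrets.randbelow(N - 1) + 1
    while math.gcd(r, N) != 1:
        r = secrets.randbelow(N - 1) + 1
    return (1 + m * N) % N2 * pow(r, N, N2) % N2


def decrypt(ciphertext):
    """Key-holder side: decrypt and decode back to a signed float."""
    m = (pow(ciphertext, LAM, N2) - 1) // N * MU % N
    if m > N // 2:
        m -= N
    return m / SCALE


def aggregate(encrypted_updates):
    """Aggregator side: multiplying ciphertexts adds the hidden plaintexts."""
    total = list(encrypted_updates[0])
    for update in encrypted_updates[1:]:
        total = [a * b % N2 for a, b in zip(total, update)]
    return total


# Hypothetical model updates (two parameters) from three clients.
client_updates = [[0.10, -0.20], [0.30, 0.40], [-0.10, 0.10]]

encrypted_updates = [[encrypt(v) for v in update] for update in client_updates]
encrypted_sum = aggregate(encrypted_updates)  # aggregator never sees plaintext

# Participants decrypt only the aggregate and divide by the number of clients.
average_update = [decrypt(c) / len(client_updates) for c in encrypted_sum]
```

The aggregator handles only ciphertexts, and the key holders learn only the sum of the updates rather than any individual client's contribution. How the decryption key is shared among participants is a separate design question that this sketch does not address.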